Why this extremely viral poll result might not be real

It's unlikely that Americans are half as patriotic as they were four years ago. Here's what's happening instead.

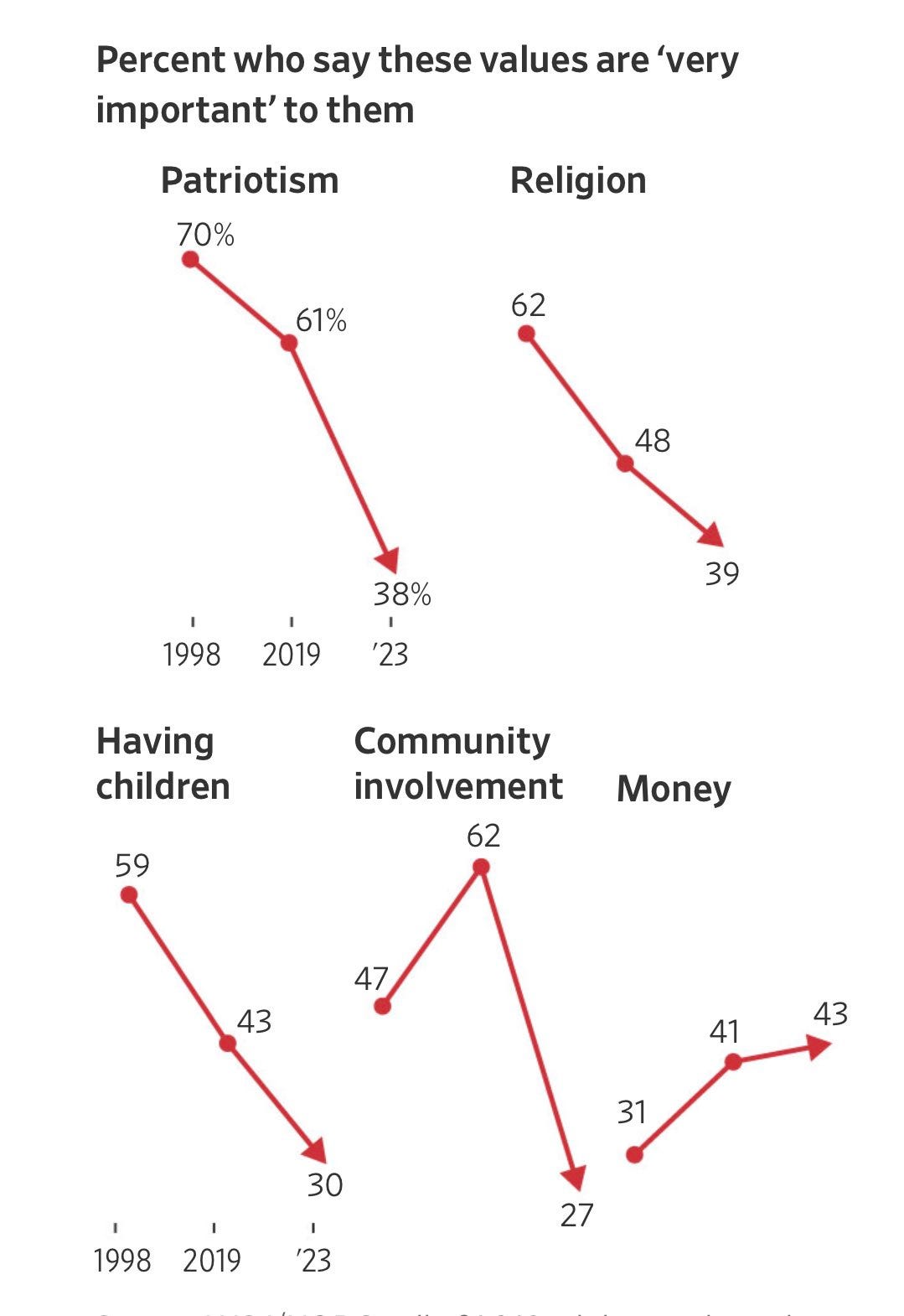

This chart from the March Wall Street Journal/NORC poll was destined to go viral. It shows that the values we think of as defining America (patriotism, having children, religion, community involvement) are falling off a cliff. And the only thing that people now value more? Money. The decline in old-fashioned values has accelerated over the last few years. In 2019, 61 percent said patriotism was very important to them. Today, that number is 38 percent. And just four years ago, 62 percent said the same of community involvement. Now that number is less than half of that: 27 percent.

These findings fit into a declinist narrative we are already predisposed to believe. And that’s what makes this chart so powerful and compelling. It’s exceptionally easy to draw sweeping conclusions from it. For example: